This idea must die: “AI will outsmart the human race”

Professor Anna Korhonen says AI is a long way from replacing the core elements that make us uniquely human.

Arnold Schwarzenegger in The Terminator, Alicia Vikander in Ex Machina, and Hugo Weaving in The Matrix: sci-fi movies have a lot to answer for when it comes to our perception that artificial intelligence will come to replace human beings. And while I cannot say that there is no way that machines will ever outsmart humans, I can categorically say that there is no hint of consciousness in any of the AI mechanisms currently out there – or on the horizon of our research.

Media headlines like “ChatGPT bot passes law school exam” and “Will AI image creation render artists obsolete?” reinforce the idea that there are no limits to what AI can do. Yes, ChatGPT can create poetry or pass exams, but it is only repeating back knowledge that already exists. And although it uses a degree of human supervision so it appears more tailored in its response, it cannot empathise or replicate the human experience of the social and physical world.

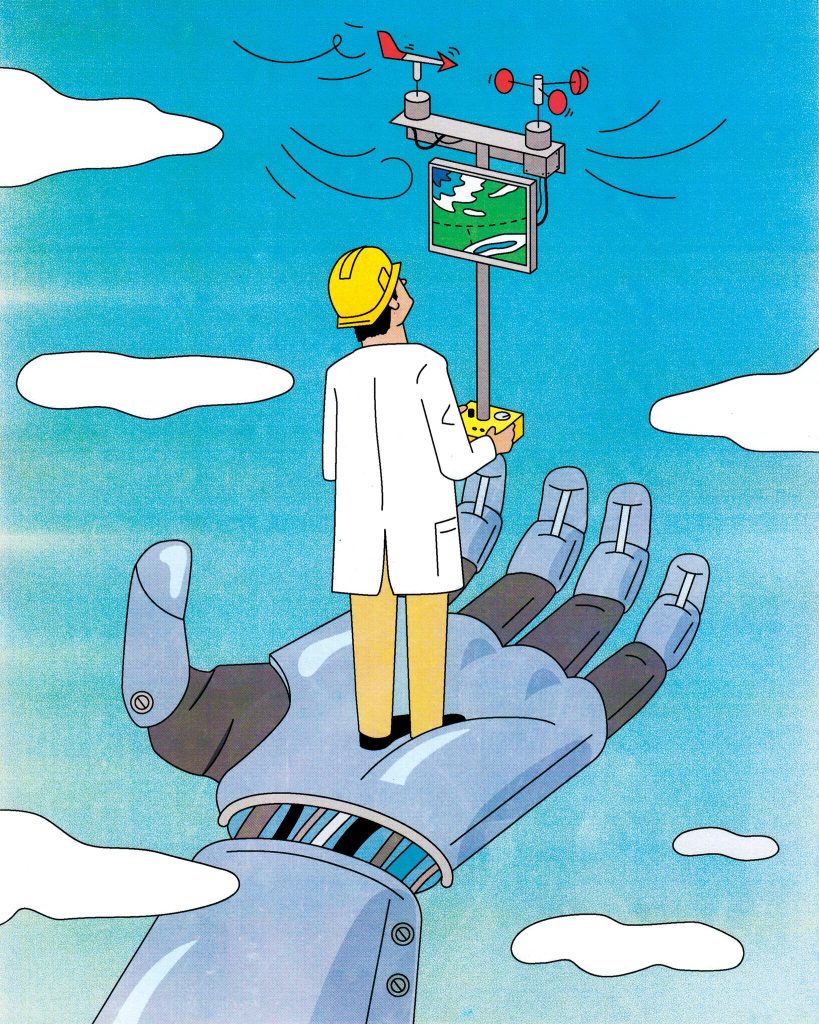

Then there is the fear that “AI is coming for white-collar jobs”. While AI will replace some jobs, the World Economic Forum recently forecast that it will create many new jobs. This technology can also be used to improve the quality of people’s work. No one would argue against the use of machines in dangerous jobs, and there is great potential to use AI to maximise human potential and minimise risk.

As Professor of Natural Language Processing, I am careful with the words I use, and I don’t like saying never! We are our own worst enemy and our greatest fear is that we create another entity that can turn against us. But I am happy to say that from a technical standpoint there is no basis for the current hype around AI outsmarting humans or taking over. We are still far from human-level AI.

In fact, the positives surrounding AI seem limitless: it can help us address the huge challenge of climate change – by improving climate predictions and helping us identify ways to reduce energy consumption; it is being used to great effect in healthcare and education; and my colleagues are using AI right now to solve really big scientific issues in biomedicine. It seems clear that it’s a very useful tool that, properly applied, benefits humanity.

The technology that allows us to work with artificial intelligence did not come out of nowhere. People have been working on it for decades, but recently the combination of Big Data and greater computing power has led to a series of leaps forward.

We are very nearly at a stage where we are using all the internet knowledge available in the world to build programs. The question is, what do we do then? The opportunity is huge, but the machines still lack the world experience, creativity, empathy, values and social skills of humans.

And AI is not available to everyone. The performance of programs like ChatGPT falls away very rapidly when you move beyond English. If we want AI to benefit humanity we should be actively working towards making it available to all of the world’s population.

Of course, the technologies can also be used for malicious purposes, be that financial fraud, toxic language, fake media or misinformation. So the suggestion that AI will take over from the human race does not describe the biggest current risk. That AI will perpetuate global inequalities, and that it will increase the threat to privacy and security, are just a couple of examples of more pressing concerns. In a world where AI is widely available, we need regulation in place. It is up to us to create a responsible ecosystem within which the creators and users of AI operate.

At the moment, the field is undergoing a “big bang”. We are experimenting with many things, and we expect to keep on improving the applications. We urgently need to address regulation and to ensure that we develop AI in a responsible and inclusive direction. But what makes us uniquely human is currently missing from artificial intelligence, and I expect the science to stay that way for a long time to come.

Anna Korhonen is Director of the Centre for Human-Inspired Artificial Intelligence (CHIA) and Professor of Natural Language Processing.