Hidden in plain sight

Machine learning has blurred the line between data and software, unleashing a tsunami of fake news, bots and clickbait. It’s just one of the issues that Neil Lawrence, DeepMind Professor of Machine Learning, says it’s time to tackle.

Call Professor Neil Lawrence on Zoom and you’ll notice, sitting on a shelf behind his desk, an ancient Mac Plus. It was salvaged by Lawrence when he was a PhD student – “the only one of my computer collection I’ve been allowed to retain,” he sighs. “The rest have all gone to the local computer history museum.” Next to the Mac Plus is a Lego Mindstorms robot, a shelf full of books about how the mind works and, he gleefully points out, a framed letter from the University. “We will not be able to offer you an undergraduate place at Downing College,” it reads.

In September 2019, some 30 years after he received that letter, Lawrence became the University’s inaugural DeepMind Professor of Machine Learning and a Professorial Fellow at Queens’. And he’s here to completely rethink the way AI is done. “The next wave in machine learning and AI will be a revisiting of what computer science means,” he says. “We need to re-examine paradigm-shifting computer science, and how we’re doing things, from the ground up.”

Back in the early days of the internet, grandiose promises about how it would change all our lives fuelled the dotcom boom, and then the crash of 2001. The crash came “not because the promises were wrong, but because it takes time to work out how to do it,” says Lawrence. “I worry about the problems that have been caused by the rush to deploy and build things before we have a good understanding of how to maximise benefit – and minimise harm. Nobody fully understands how all this works – but it’s not sentient. It’s just a bunch of interacting software components doing stuff that behaves in a certain way and that can affect society in dramatic ways.”

Fake news, bots, search engine optimisation, clickbait: they’re all symptoms of the same problem – machine learning blurring the line between software and data. A virus, he explains, works by getting inside the software and persuading it to do something the developer hadn’t intended it to do. It’s why we spend a lot of time and money trying to stop data being interpreted as software. But in machine learning, the parameters of the model come from learning on the data rather than being in the control of the programmer. “That’s a high-level breach of this data/software separation,” says Lawrence. “It’s at the core of many of the challenges we’re going to face – and they’re going to get worse.”

The problem is built into the system, he says. “It’s not a conspiracy by Facebook or Twitter. It’s simply people using real-world data to game a data-driven system, in an adversarial way – making their information more prominent than the information you really want. It’s not new. Remember when Google searches were ranked according to how many links a page had? People simply said: OK, I’ll build a link farm that refers people to my site. And if your system is using real-world data, you can’t precisely program against that with something like ‘If person is good, then trust’. You have to use proxies for ‘goodness’. And people will try to game those proxies. You’re constantly under attack, but the attacks are harder to identify. To recognise when it’s happening, and redeploy, is a huge problem and people are not talking about it enough.”
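The link-farm example quoted above can be made concrete with a toy sketch in Python. It assumes a deliberately naive ranker that scores each page only by how many distinct pages link to it (real search engines have long relied on far more elaborate signals). The function name, the page names and the ten-page "farm" are all hypothetical, chosen purely for illustration.

```python
from collections import Counter

def rank_by_inbound_links(links):
    """Rank pages by the number of distinct pages linking to them.

    A hypothetical, naive proxy for "goodness": more inbound links = better.
    `links` is an iterable of (source_page, target_page) pairs.
    """
    inbound = Counter()
    for src, dst in set(links):      # count each distinct link once
        if src != dst:               # ignore pages linking to themselves
            inbound[dst] += 1
    # Highest count first; ties broken alphabetically for a stable order.
    return sorted(inbound, key=lambda page: (-inbound[page], page))

# A small, made-up "honest" web: three blogs cite a genuinely useful page.
honest_web = [
    ("blog-a", "useful-guide"),
    ("blog-b", "useful-guide"),
    ("blog-c", "useful-guide"),
    ("blog-a", "spam-site"),
]

# A link farm: pages that exist only to point at the spammer's site.
link_farm = [(f"farm-{i}", "spam-site") for i in range(10)]

print(rank_by_inbound_links(honest_web))              # ['useful-guide', 'spam-site']
print(rank_by_inbound_links(honest_web + link_farm))  # ['spam-site', 'useful-guide']
```

Nothing in the ranker is "hacked": the spammer simply feeds it real-world data shaped to exploit the proxy, which is the kind of adversarial gaming Lawrence describes.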

It’s not a conspiracy – it’s simply using real-world data to game a data-driven system

This kind of real-world application fascinates Lawrence, who has done stints in industry as well as academia. After studying mechanical engineering at Southampton, he headed off to the North Sea to work as a field engineer. “I just love making stuff work,” he says. “Working on the rigs was interesting, but I wanted more. It was pre-internet; I was reading New Scientist and they were talking a lot about neural networks. I bought myself a laptop and started playing around with networks in my downtime.”

In 2000, Lawrence came to Cambridge for a PhD on neural networks. It was a wonderful time to be in the field, he says, with cognitive science, signal-processing physics, applied mathematics and statistics coming together to produce a creative diversity of thinking.

The idea of neural networks – models inspired by the workings of the brain – has been around since pioneers such as Bletchley Park alumni Alan Turing (King’s 1931) and Tommy Flowers began to build machines and models that could emulate logic. “The typical way we get a machine to do something builds on what’s called von Neumann architecture: we use a programming language to tell the machine what information to put where. A control unit puts stuff in memory, takes it out, does maths to it – but that’s not how the brain works at all,” he explains. “Brains learn by having synapses – connections – which determine which neurons fire, and learning in the brain occurs by adjusting how strong these connections are. But that’s distributed across our entire bodies. There is no single, centralised intervention point where I can put code in, like there is on a digital machine. So the question is: how do you build systems that have these distributed characteristics, so different from a classic computer system? In machine learning, the way we’re programming machines is that instead of intervening at any individual point, you’re teaching by showing an input and what you expect the output to be, and then trying to change the parameters across the system to make this happen. By the end of my PhD, I was trying out all sorts of different methods.”
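The idea of "teaching by showing an input and what you expect the output to be" can be illustrated with a single artificial neuron. The Python sketch below is an assumed, textbook-style example rather than any method of Lawrence's own: two "connection strengths" (weights) and a bias start at zero and are nudged by gradient descent on a logistic loss until the neuron reproduces logical OR. Nobody writes the rule for OR into the program; it emerges from the examples. The learning rate and number of passes are arbitrary choices.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1), like a neuron's firing rate."""
    return 1.0 / (1.0 + math.exp(-z))

# Training examples: each input paired with the output we expect (logical OR).
examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights = [0.0, 0.0]   # connection strengths, one per input
bias = 0.0
learning_rate = 0.5    # arbitrary step size

for epoch in range(2000):
    for (x1, x2), target in examples:
        output = sigmoid(weights[0] * x1 + weights[1] * x2 + bias)
        # For a sigmoid output with cross-entropy loss, the gradient with
        # respect to the weighted sum is simply (output - target).
        error = output - target
        # Adjust every connection a little in the direction that reduces error.
        weights[0] -= learning_rate * error * x1
        weights[1] -= learning_rate * error * x2
        bias -= learning_rate * error

def predict(x1, x2):
    """The trained neuron's output for a pair of inputs."""
    return sigmoid(weights[0] * x1 + weights[1] * x2 + bias)

print([round(predict(x1, x2)) for (x1, x2), _ in examples])  # [0, 1, 1, 1]
```

Modern networks stack millions of such units, but the principle is the same: the behaviour lives in parameters learned from data rather than in instructions written by a programmer.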

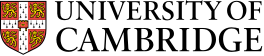

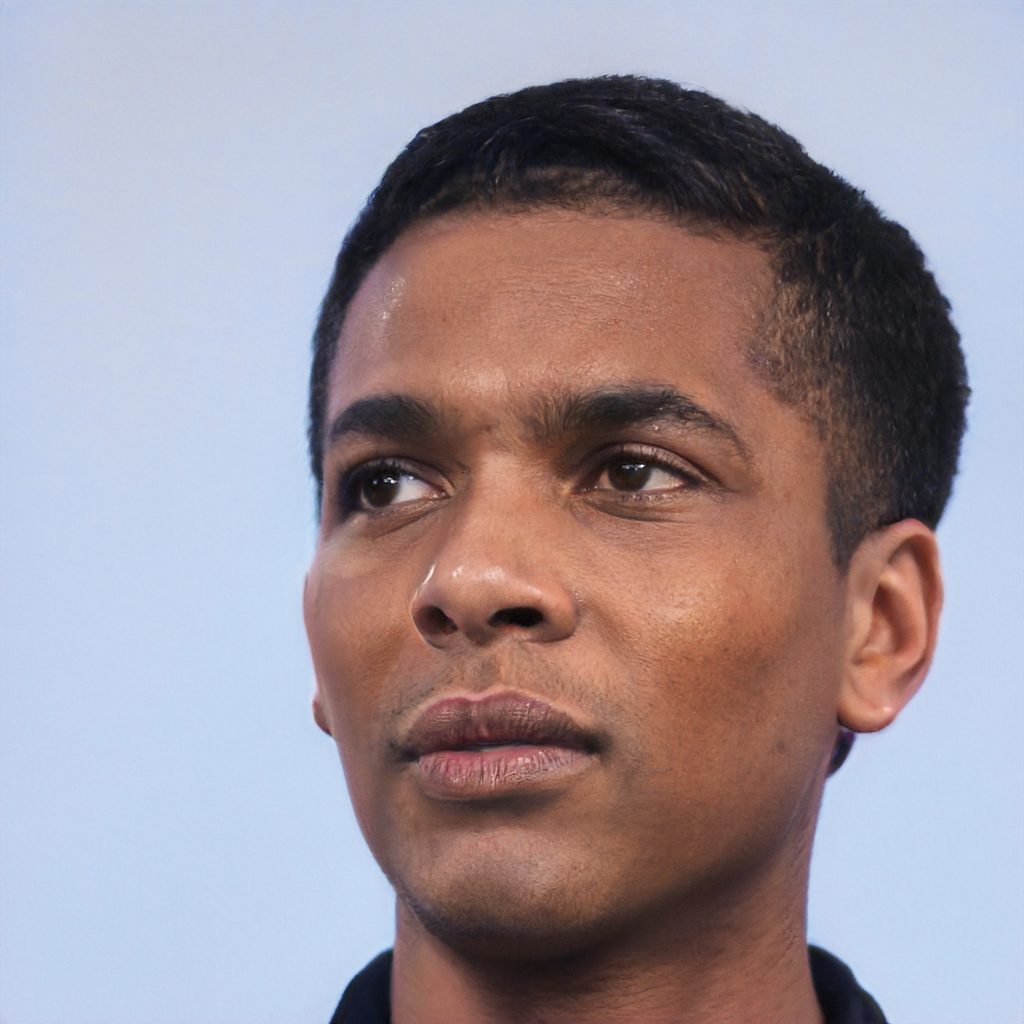

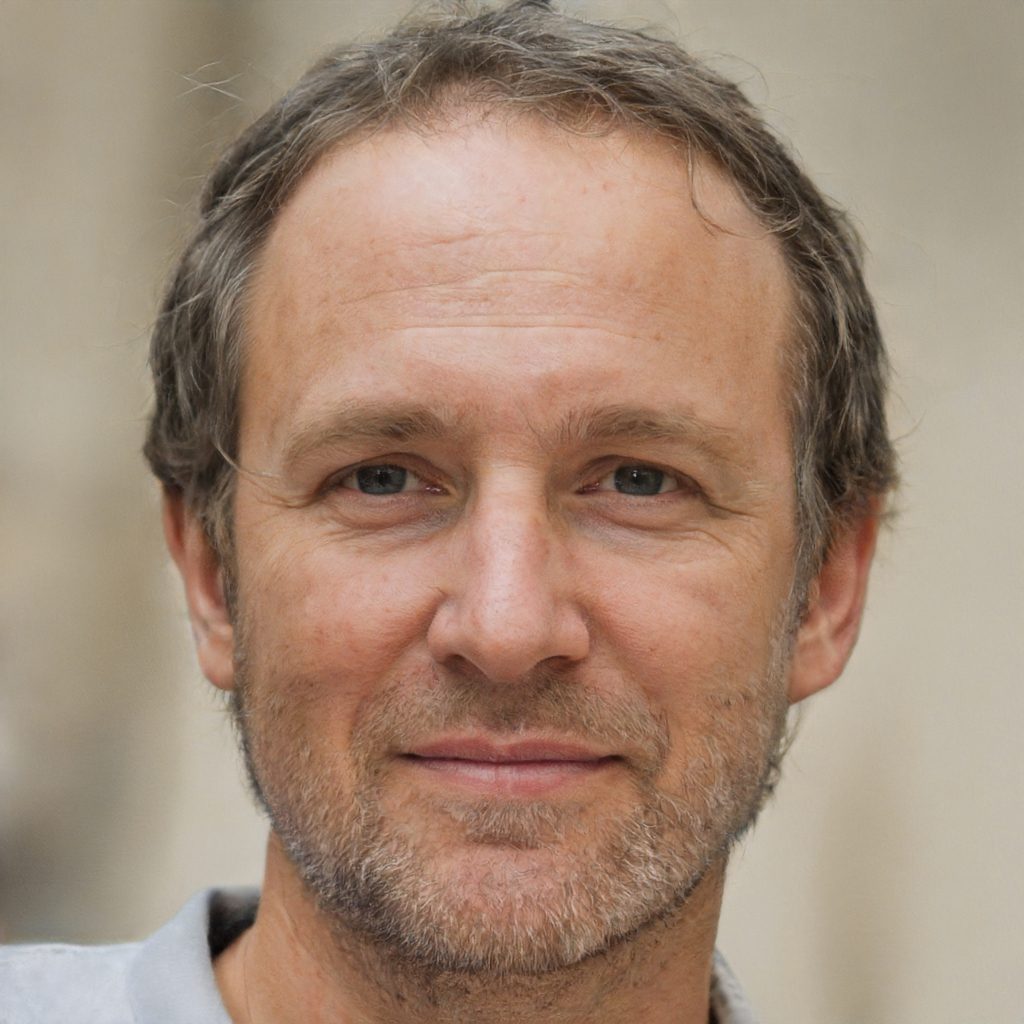

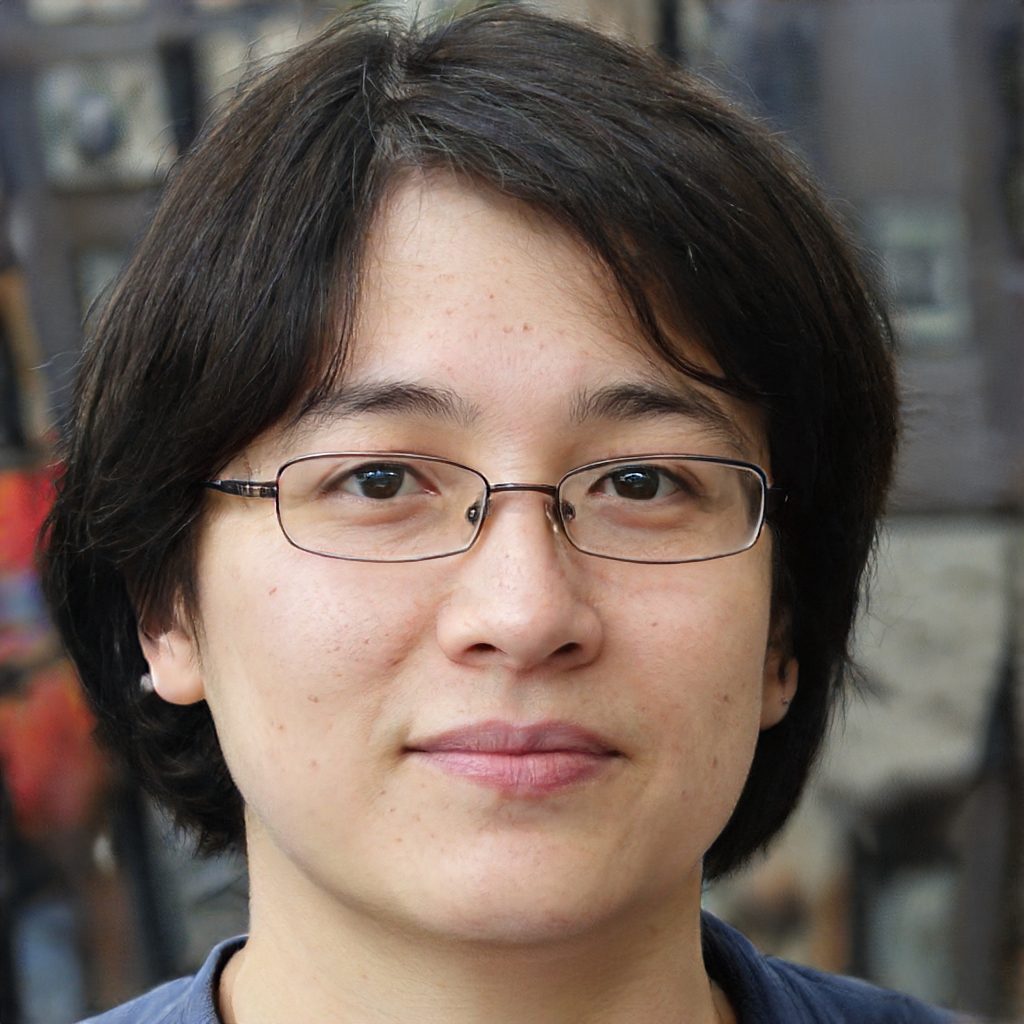

Incredibly, all but one of the faces pictured above belong to people who do not exist. Like ‘deepfakes’ (which use deep learning to fabricate images of events that never happened), they were generated by an AI program trained on a database of real images. The one exception is Professor Neil Lawrence. Can you spot the real face hidden in plain sight?

Lawrence was itching to test out his new methods in industry. Unfortunately, in the early 2000s industry didn’t have the foresight to realise just how important machine learning would become. So after a short stint at Microsoft, he took up a position at the University of Sheffield, followed by a spell at the University of Manchester, before returning to Sheffield as Chair in Neuroscience and Computer Science. But in 2016, he was tempted back into industry, becoming Amazon Cambridge’s Head of Machine Learning. He stayed at Amazon for three years until the DeepMind professorship came up. “When I’m in industry, what I really want to see is what happens when the rubber hits the road – when stuff gets deployed, goes wrong, so we get on with the next thing and that works. But when there’s a need to step back and reflect on what we should be doing better, that’s better done in academia.”

Along with big-picture thinking – further aided by a Turing Institute AI fellowship – Lawrence is also keen to continue his work with Data Science Africa, which he helped set up in 2013. It’s an initiative supporting the data science community in Africa to deploy machine learning and data science techniques to solve real-world problems, such as monitoring cassava disease in the field or understanding the distribution of malaria. “This is what I’m talking about: creating effective machine learning systems design. These researchers and scientists in Africa can build the models and devices and do the analysis, but they don’t have a sustainable ecosystem to bring it together, one that doesn’t require thousands of people to maintain it. That’s a really important part of what I will be focusing on.”

He’s also currently working with the Royal Society’s DELVE group, doing what he calls “operational science” around Covid-19. “Having worked at Amazon, trying to answer questions on a weekly basis – that’s very different from academic science and it’s what you need for the pandemic. I’m helping to take the lessons from operational science at Amazon and use them within the group to help give the best advice we can on the understanding of the virus. We are bringing together a great, diverse group, from economists to virologists – there’s a real diversity of expertise.”

Machine learning and AI were born in the computer science lab, says Lawrence, and he’s eager to take them back in there, pull them apart and invent them anew. “You can’t put the genie back in the bottle. So I think the onus is on technical people to talk to society, understand what the problems are, and provide the solutions. And the Cambridge computer lab is absolutely the right place to do it. It might sound overly ambitious. But when I look at the individuals that I’m surrounded by, what they are capable of doing and what they’ve done in the past, I know we can do it.”